Running Halmos by Default for Library Testing

If you are already "thinking in properties" when writing fuzz tests, I encourage you to run Halmos by default.

Introduction

In the upcoming Size Code4rena contest, auditors will see a mix of unit and fuzz tests with Foundry, invariant tests with Echidna, and property-based tests with Halmos.

To create the best “effort-to-reward ratio” in terms of security, the project structure was created using a TDD-like approach. It began with many unit tests ported from a Python reference implementation, then expanded with fuzz tests, and finally included invariant tests.

As I often advocate, I believe this order maximizes the likelihood of finding real and meaningful bugs in a fast development cycle. If projects have time and money, they should also invest in formal verification as a next step. In some cases, however, this order should be adjusted.

This post explores why running Halmos by default can not only save time but sometimes provide better guarantees of the code’s behavior than fuzzing.

Thinking Properties

I often refer to this great material from Certora, which shares a categorization of system properties that protocols can use when expanding on their list of invariants, even when writing fuzz tests.

After some time, this exercise becomes a second language for developers, who start improving their stateless fuzz tests to not only check for expected/actual values but also the maintenance of important invariants. With enough time, the fuzzer doesn't catch any bugs, and your confidence in the accuracy of the implementation is meant to deem it good enough.

One function that did not fare quite well in this approach was a binary search method for interpolating interest rates on a yield curve:

| // SPDX-License-Identifier: MIT | |

| pragma solidity ^0.8.13; | |

| interface IMath { | |

| /// @notice Find the index of `value` in the sorted list `array` | |

| /// @dev If `value` is below the lowest value in `array` or above the highest | |

| /// value in `array`, the function returns | |

| /// (type(uint256).max, type(uint256).max) | |

| /// @param array The sorted list to search | |

| /// @param value The value to search for | |

| /// @return low The index of the largest element in `array` that is less than | |

| /// or equal to `value` | |

| /// @return high The index of the smallest element in `array` that is greater than | |

| /// or equal to `value` | |

| function binarySearch(uint256[] memory array, uint256 value) external | |

| pure returns (uint256 low, uint256 high); | |

| } |

Like most software developers, one’s ability to flawlessly implement basic algorithms and data structures fades away when they stop going through beginner job interview processes. What is more, this binary search is a non-straightforward implementation, as it returns two indexes instead of one, and it is in a critical path of the protocol since you can't find a rate on a yield curve without linear interpolation. Simple fuzz tests were not making my anxiety go away, no matter how many audit firms would manually review this implementation.

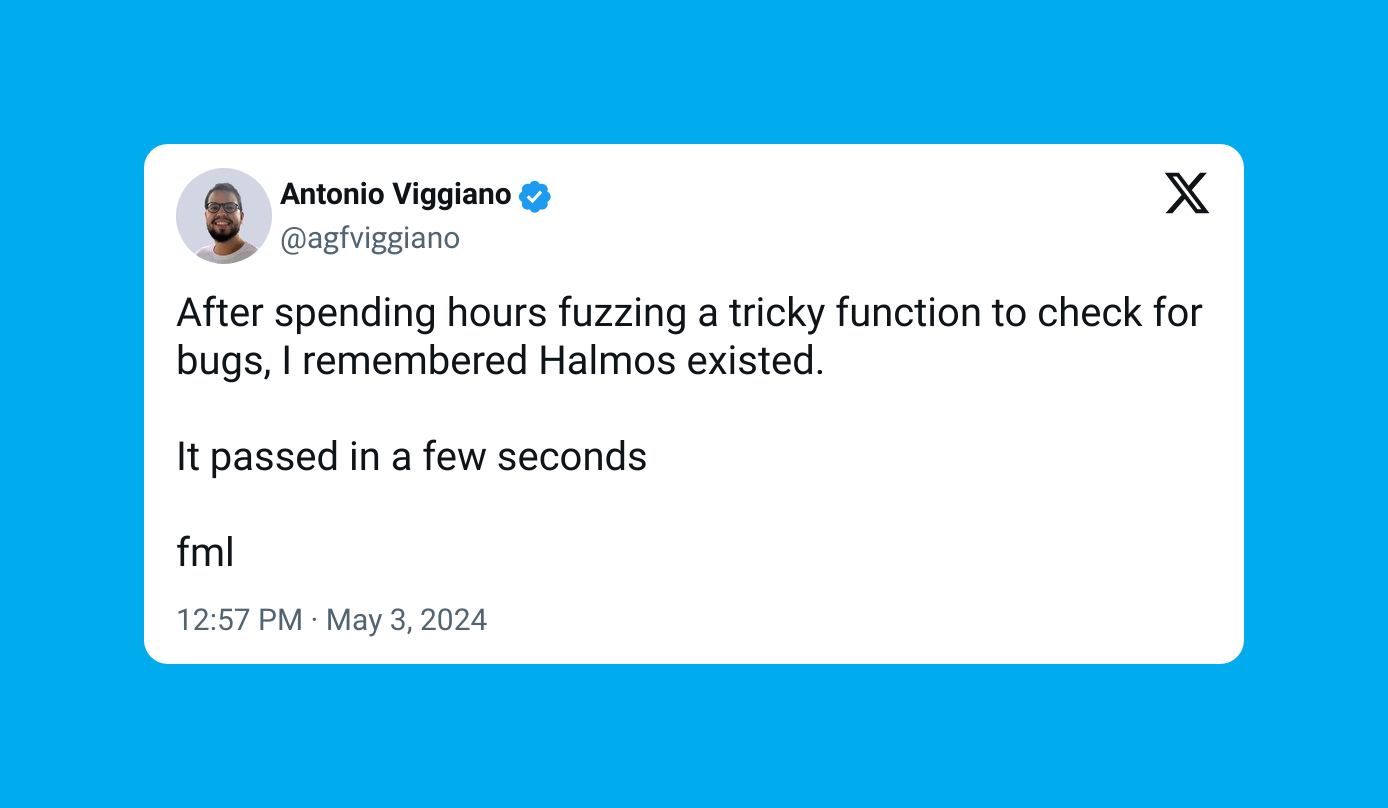

After some time tinkering again and again expanding the test coverage for this particular function, something came to mind. What if I used Halmos instead? After all, the fuzz tests were already done, the properties were already created, and with minimal effort, I could convert them to property-based tests and run Halmos. To my surprise, it passed without errors in a few seconds.

| contract MathTest is Test { | |

| function check_Math_binarySearch(uint256[] memory array, uint256 value) public { | |

| // array is strictly increasing | |

| for (uint256 i = 0; i < array.length - 1; i++) { | |

| vm.assume(array[i] < array[i + 1]); | |

| } | |

| (uint256 low, uint256 high) = Math.binarySearch(array, value); | |

| if (value < array[0] || value > array[array.length - 1]) { | |

| // not found | |

| assertEq(low, type(uint256).max, "not found"); | |

| assertEq(high, type(uint256).max, "not found"); | |

| } else { | |

| assertGe(low, 0, "low is within bounds"); | |

| assertLe(low, array.length - 1, "low is within bounds"); | |

| assertGe(high, 0, "high is within bounds"); | |

| assertLe(high, array.length - 1, "high is within bounds"); | |

| assertLe(low, high, "low <= high"); | |

| assertLe(array[low], value, "array[low] <= value <= array[high]"); | |

| assertLe(value, array[high], "array[low] <= value <= array[high]"); | |

| if (value == array[low]) { | |

| assertEq(low, high, "low == high if the value is in the array"); | |

| } | |

| } | |

| } | |

| } |

This anecdote underscores how security researchers can shift their mentality of using formal verification as a last step and instead adapt case by case. This post advocates for using Halmos by default in some situations until you find a real blocker and need to resort to faster yet less definite tools.

In particular, libraries can benefit the most, since they are often simpler, resulting in fewer paths for the tool to explore. Additionally, after some recent improvements made by the maintainers, it should be running much faster than before, addressing a common concern with symbolic execution and its potential to run indefinitely.

Conclusion

Incorporating Halmos into your default testing routine, especially for libraries, can lead to significant improvements in efficiency and confidence. By leveraging the strengths of symbolic execution alongside traditional fuzzing, security researchers can achieve a more robust verification process.